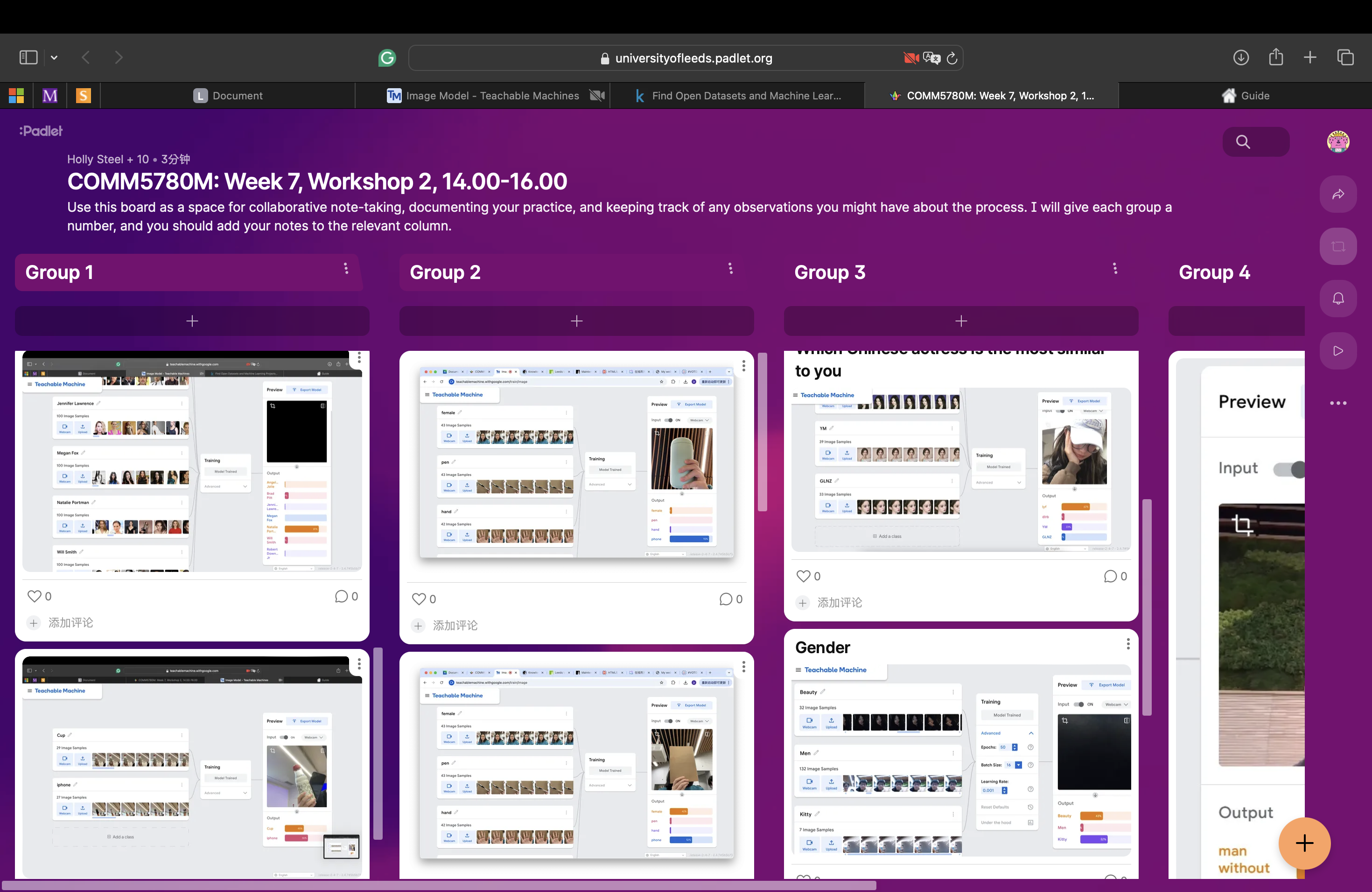

By using Teachable Machine, we trained the model, tested the model, and improved the model. At first, I only uploaded photos of a water cup and a mobile phone, and divided them into two classes. When the corresponding objects appeared in the screen, the model could quickly distinguish the two objects. However, when I appeared on the screen, the model classified me as a mobile phone. This may be because I was wearing black clothes today, which is the same color as my mobile phone. After adding the class and photos about me and retraining the model, it can also recognize me. This shows that the more data is input, the higher its accuracy. I found that it would be misled by the same color or similar shapes.

Then, I used the celebrity face data photos from the Kaggle public dataset to improve and retrain the model. Since the celebrities in this dataset are all Western celebrities, all the indexes of the model kept floating when I appeared on the screen. Since it didn't have my relevant face data, it couldn't match me with any Western celebrities. In addition, when my group member Danny appeared on the screen, he matched the black celebrity the most. This is probably because there is only one black celebrity in this data set, and his skin color is close to Danny's.

Through these examples, we can find invisible stereotypes and racial distinctions. This is because the model can only respond to the data we input, and the data often contains people's thoughts and ideas. To address these difficulties and challenges, perhaps adding more photos of different races to the data set is a solution. But we should also pay attention to whether this will further amplify this dilemma. These examples relate to this week's readings, the racialization in the videos and materials, and the facial recognition technologies for black people. We can’t rely on the module and people need to be aware of this fact.